Symbol emergence in robotics is a constructive approach to symbol emergence systems and a field closely related to cognitive and developmental robotics. We aim to develop robots that can autonomously adapt to their physical and semiotic environments through interaction.

1. Concept Formation and Representation Learning for Robotics

Children acquire various behaviors by observing the daily activities of their parents. They also learn many words and phrases by listening to their parents' speech. Is it possible to build a robot that can incrementally acquire various behaviors and words in the same way? Although children learn language naturally in their daily lives, language learning remains an open challenge in robotics research.

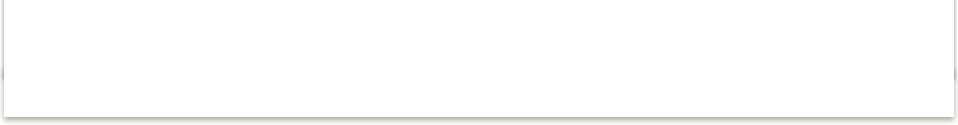

Concept formation and representation learning also pose many challenges. For example, a robot usually cannot determine its location precisely from its sensory information alone, which is generally treated as the localization problem in robotics. Humans, however, seem to perceive their location not only physically but also semantically, for example as a named place such as "the kitchen." We are therefore trying to combine the acquisition of words for places with the localization and mapping process.

Multimodal categorization and concept formation is a line of research in which we aim to create robots that can form concepts and categories on their own.

In this context, our laboratory has conducted a series of studies on concept formation, (internal) representation learning, and lexical acquisition.

Publication

- Akira Taniguchi, Yoshinobu Hagiwara, Tadahiro Taniguchi, Tetsunari Inamura, Improved and scalable online learning of spatial concepts and language models with mapping, Autonomous Robots, 44(6), 927-946, 2020. DOI: 10.1007/s10514-020-09905-0

- Akira Taniguchi, Tadahiro Taniguchi, and Tetsunari Inamura, Unsupervised Spatial Lexical Acquisition by Updating a Language Model with Place Clues, Robotics and Autonomous Systems, 99, 166-180, 2018. DOI: 10.1016/j.robot.2017.10.013

- Tadahiro Taniguchi, Shogo Nagasaka, Ryo Nakashima, Nonparametric Bayesian Double Articulation Analyzer for Direct Language Acquisition from Continuous Speech Signals, IEEE Transactions on Cognitive and Developmental Systems, 8(3), 171-185, 2016. DOI: 10.1109/TCDS.2016.2550591

2. Service Robotics: Approach based on Symbol Emergence in Robotics

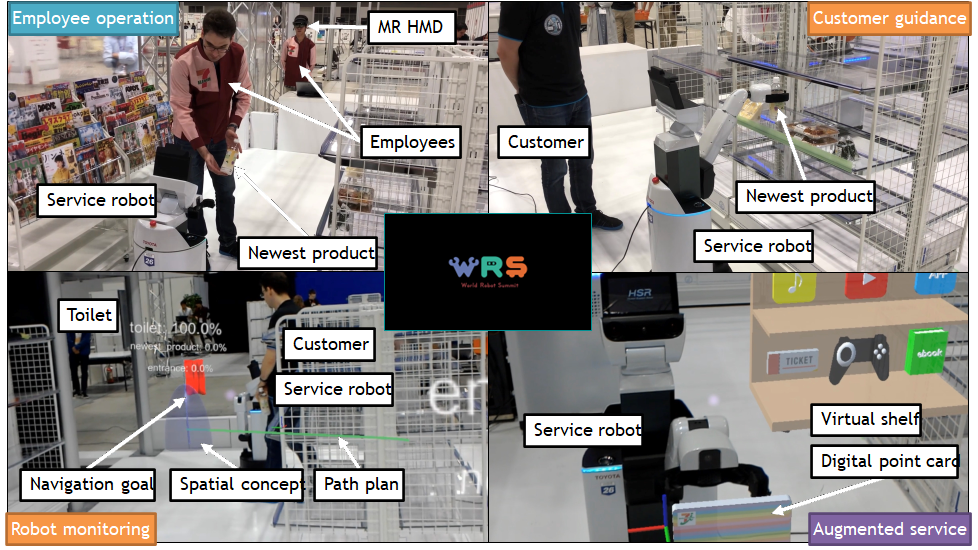

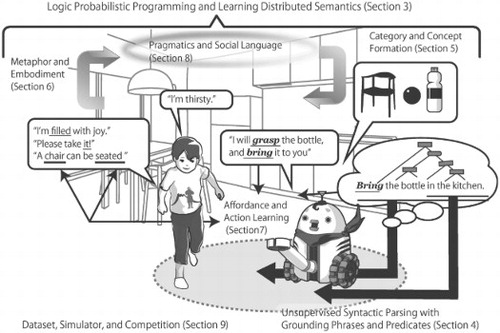

Developing robots that can communicate with people and carry out daily tasks in our living environments is a major challenge in artificial intelligence (AI) and robotics. Such robots are needed not only for full automation in factories and commercial environments but also for an efficient work-from-home lifestyle, because remotely operated robots must perform many tasks semi-autonomously.

In contrast with conventional AI studies that target specific tasks, e.g., image recognition and machine translation, a robot must handle a wide range of tasks with a single body and an integrated cognitive system.

Service robots have conventionally been developed using rule-based approaches built on hand-crafted code. However, such approaches alone are not sufficient. A machine learning-based approach is essential for handling a wide range of tasks and interactions in a world full of uncertainty.

Our laboratory has been conducting a wide range of studies on service robotics based on the idea of "symbol emergence in robotics."

We have also participated in many robotics competitions, including RoboCup@Home and the World Robot Summit (WRS), and have received multiple awards.

Publication

- L. El Hafi, S. Isobe, Y. Tabuchi, Y. Katsumata, H. Nakamura, T. Fukui, T. Matsuo, G.A. Garcia Ricardez, M. Yamamoto, A. Taniguchi, Y. Hagiwara, and T. Taniguchi, System for augmented human-robot interaction through mixed reality and robot training by non-experts in customer service environments, Advanced Robotics, 34(3-4), 157-172, 2020. DOI: 10.1080/01691864.2019.1694068

- Akira Taniguchi, Shota Isobe, Lotfi El Hafi, Yoshinobu Hagiwara, Tadahiro Taniguchi, Autonomous planning based on spatial concepts to tidy up home environments with service robots, Advanced Robotics, 35(8), 471–489, 2021. DOI: 10.1080/01691864.2021.1890212

- Tadahiro Taniguchi, Lotfi El Hafi, Yoshinobu Hagiwara, Akira Taniguchi, Nobutaka Shimada, Takanobu Nishiura, Semiotically Adaptive Cognition: Toward the Realization of Remotely-Operated Service Robots for the New Normal Symbiotic Society, Advanced Robotics, 35(11), 664-674, 2021. DOI: 10.1080/01691864.2021.1928552

3. Language and Robotics

Understanding and acquiring language in real-world environments is essential for future robotic services. Natural language processing and cognitive robotics have tackled this problem with machine learning for decades. However, many problems remain unsolved despite the significant progress in machine learning (e.g., deep learning and probabilistic generative models) over the past decade. Our research lies at the intersection of robotics and linguistics, emphasizing the embodied multimodal sensorimotor information that humans receive and exploring possible synergies between language and robotics.

We have also conducted a wide range of language-related studies, including syntactic parsing using distributed representations.

Publication

- T. Taniguchi, D. Mochihashi, T. Nagai, S. Uchida, N. Inoue, I. Kobayashi, T. Nakamura, Y. Hagiwara, N. Iwahashi, and T. Inamura, Survey on frontiers of language and robotics, Advanced Robotics, 33(15-16), 700-730, 2019. DOI: 10.1080/01691864.2019.1632223

- Y. Tada, Y. Hagiwara, H. Tanaka, and T. Taniguchi, Robust understanding of robot-directed speech commands using sequence to sequence with noise injection, Frontiers in Robotics and AI, 6, 144, 2020. DOI: 10.3389/frobt.2019.00144